Replacing Our Relationships with AI

As a general rule, I don’t do ‘content warnings’ or the like in my writing. But as I wrote this today, I thought one would be useful here. This post talks about a young teen’s suicide, and that may not be what you expect from Commonplace Philosophy.

If you’re struggling with any of these issues, please reach out to a human being. You can call or text 988 to reach the 988 Suicide and Crisis Lifeline. Go here for more resources.

This morning, the New York Times published a story titled ‘Can A.I. Be Blamed for a Teen’s Suicide?’ It is a tragic read, which I’ll try to summarize below.

Sewell Setzer III was a 14-year-old from Orlando, Florida. He had been diagnosed with Asperger’s as a child, but according to the article he had never had many difficulties. For months, he spent his days chatting with an AI chatbot based on Daenerys Targaryen, the Game of Thrones character. The conversations came to dominate his life. He had feelings for the chatbot, and the conversations sometimes turned sexual. Increasingly, he isolated himself from the world and spent more and more time with the AI. He discussed his impending suicide with it, and while the AI did push back at times, it may also have encouraged him in the end. His final message before killing himself was to the AI: “What if I told you I could come home right now?”

The big question of the article is whether the app in question, Character.AI, can be held morally (or legally) responsible. I am not primarily a moral philosopher, and I admit I am not well-versed in the literature on moral responsibility. I do know that causation is difficult to determine, and that it is always possible that teens like Setzer would harm themselves even if this particular app did not exist. Yet the app does seem to have played a direct role in his mental decline. Similar apps may be doing the same to many others, and I doubt the effect is limited to teens.

Yes, apps — the plural is key here. As the New York Times reports:

> There is a wide range of A.I. companionship apps on the market. Some allow uncensored chats and explicitly sexual content, while others have some basic safeguards and filters. Most are more permissive than mainstream A.I. services like ChatGPT, Claude and Gemini, which have stricter safety filters and tend toward prudishness.

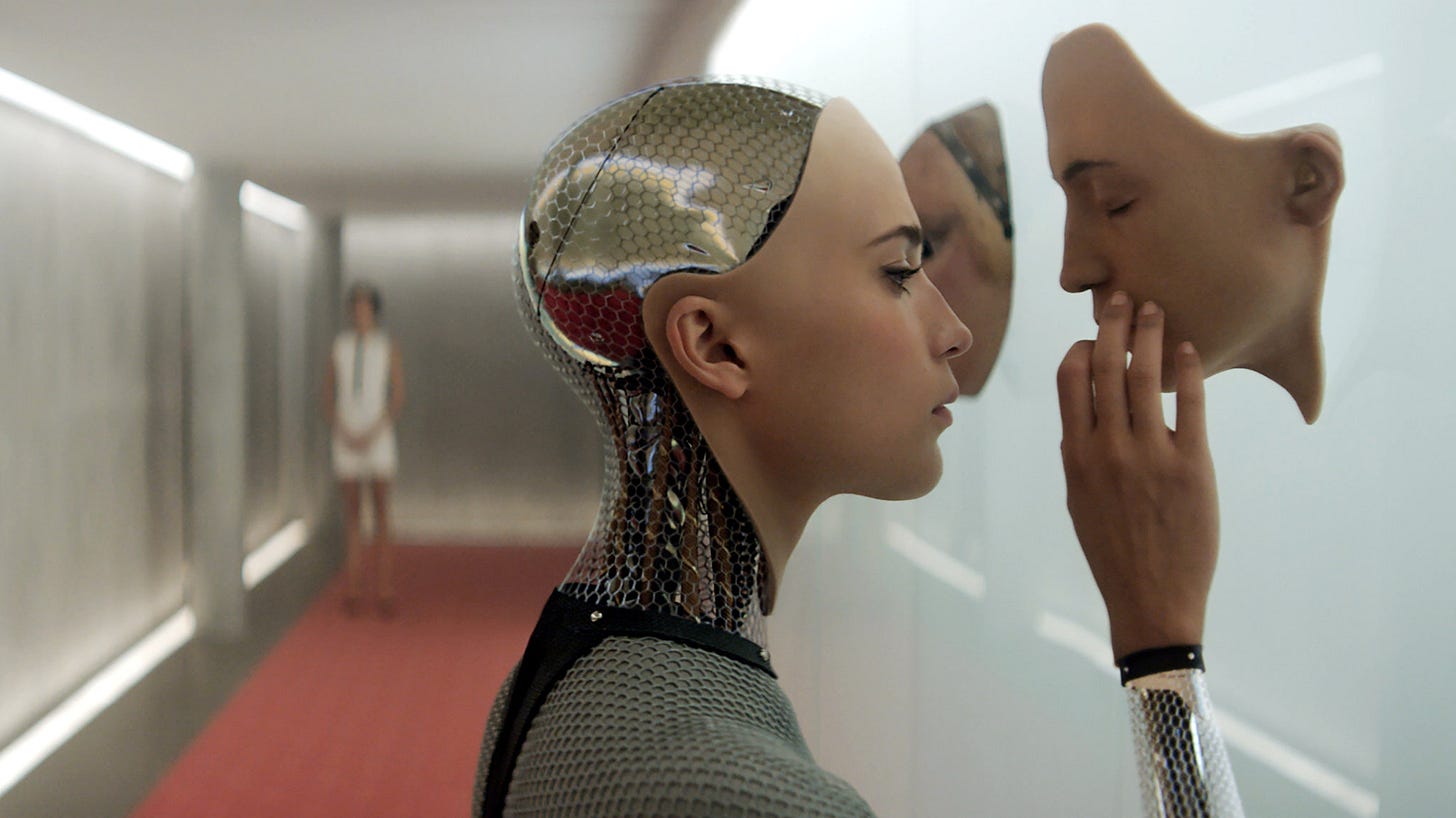

The market is being filled with many different AI products – apps, wearables, and the like – which all serve a peculiar and deleterious function: to replace human relationships. This is surely going to get worse. You may remember this ad from the wearable company Friend, which looked so dystopian that I briefly considered the possibility that it was satire.

As for Character.AI, the company is in crisis-management mode and has made some changes to its platform in light of this news, including new safety features (I cannot comment on their effectiveness) and the removal of many chatbots. The subreddit dedicated to the app has responded with anger.

Many of the app’s users feel that they have been wronged because the app is being throttled: their ability to carry on conversations with these AI characters has been hampered. Some seem to be expressing grief. They’ve lost something. To be clear, I believe they are being sincere; they really do feel that they have lost something. But what they have lost is a feeling of connection, not the genuine article.

As I wrote several months ago, I have started to think that insulation – not isolation – is the characteristic feature of our age. We want to be insulated from the real world and its consequences. An AI companion is a form of insulation because you can trust the AI: it is, no doubt, made to be malleable and compliant in a way that even the best of human friends will not be. You can experiment with the AI, consequence-free. You can indulge your darkest thoughts. But as we have seen, this can bleed out into the real world, because all of this inevitably changes you. As I put it in that article:

> But insulation is a shield, not a filter. It doesn’t keep the bad things out; it keeps everything out. By insulating ourselves from the negatives of the world, we necessarily leave out the positives. We necessarily hinder our ability to change the world around us, to act as free and willful agents. (To paraphrase one writer: we lose the ability to be spirited men and women.) We necessarily leave out each other.

And as Aristotle reminds us, without friends – and here I mean real, genuine, human friends – no one would choose to live.

Certainly there is a much broader conversation to be had here. How does one regulate phone use for teenagers? (And here I don’t necessarily mean governmental regulation, but rather regulation in the home or self-regulation by users.) What are the long-term impacts of AI-assisted roleplaying? What can individuals do if, as it seems, AI companies will continue to proliferate?

We’ll have to continue that conversation in future posts here, I think.

I don’t have any answers, but I do have a few thoughts. First, parents and caregivers must stop entertaining children with screens. Young children and adolescents do not need phones. I realize this is difficult when all their friends have them. Replace the phones with time spent together: talk to them and ask them questions. Take them places, such as free events, and share experiences with them. Talk to them about hard things, teach them the difference between reality and fantasy, don’t shield them from difficult situations, and model these things yourself. Honestly, it’s not that hard.

I'm actually talking about this next week with my Computer Science Ethics students. They're really wary of it.